This past fall, I challenged myself and hopped on the machine learning bandwagon. It’s been quite the ride.

For those not familiar with the field, machine learning is essentially the art of making predictions with computers. Furthermore, it is a HOT field.

Researchers are using machine learning for applications ranging from creating self-driving cars to diagnosing cancer to beating humans at playing Go. On top of that, the field has a pretty cool sounding name. “Machine Learning”: sure sounds futuristic and mysterious to me.

Suffice it to say that with all the buzz around machine learning, it sometimes feels like everyone and their mother is trying to get in on it. It especially felt that way when I showed up to the first day of my machine learning class and found one of the largest auditoriums at MIT packed full of eager graduate students.

Seeing the wall-to-wall faces all around me, I felt a slight wave of apprehension set in. Was this class going to be ridiculously competitive? Was I really qualified for this class? How the hell did I end up taking this class? Better yet, how did I even end up at MIT?

Part of my fears stemmed from my interdisciplinary background. I am in the PhD program in Computational and Systems Biology or CSB (pronounced sizz-bee). CSB is a great fit for me as my academic background is a mishmash of chemistry, math, biology, and computer science. However, being in an interdisciplinary field, I sometimes catch myself feeling like a jack of many trades, master of none.

That feeling set in fast on my first day of machine learning class. I realized that while I knew much of the prerequisite material, there were some sizable holes in my knowledge, and I would need to do a lot of catching up. In short, I foresaw the bumpy ride ahead of me.

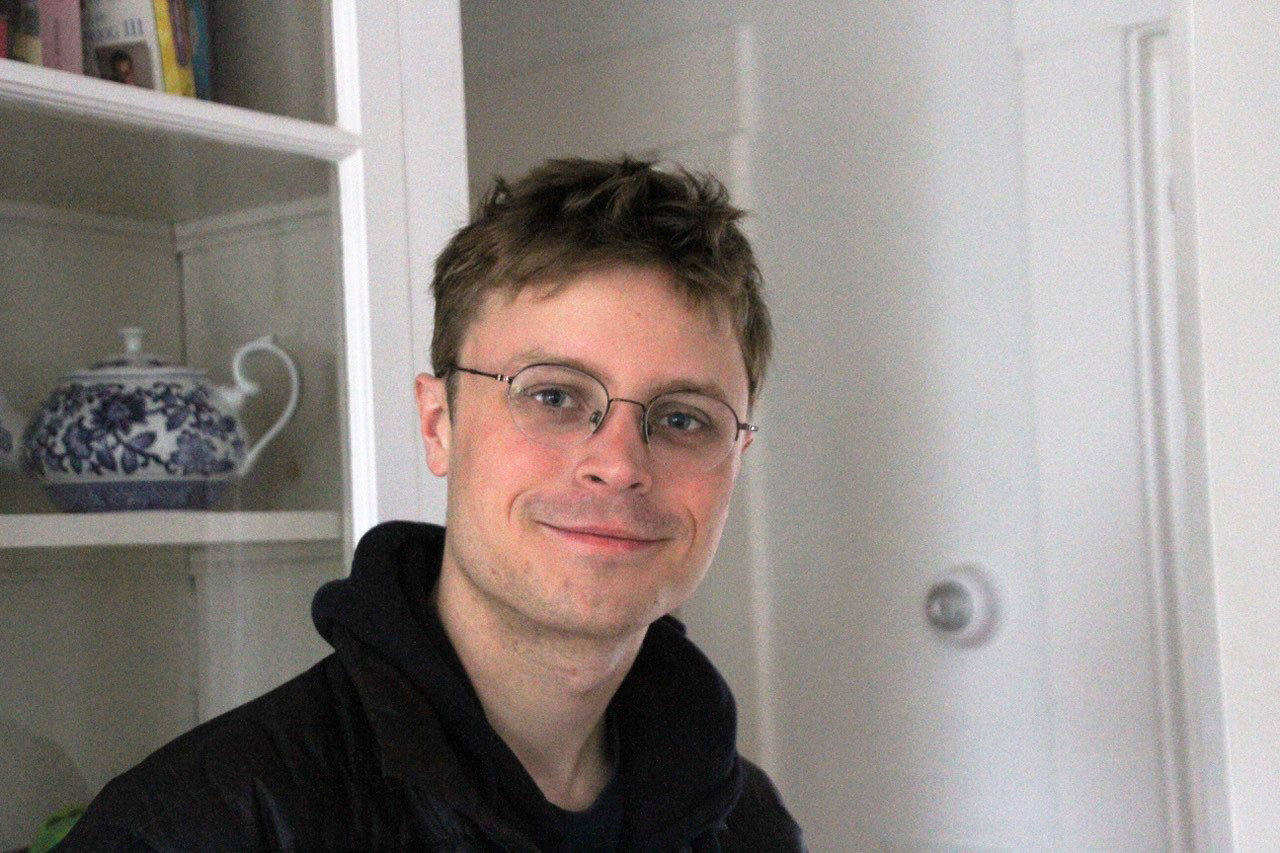

And bumpy it was. Take the first exam, for example. If a car ride is a metaphor for my machine learning experience, then I think this photo depicting an anonymous classmate’s reaction to the first exam aptly expresses just how riddled with bumps the ride was.

But while the journey may have been wild, I’m quite glad I set out on it. I hung on through the twists and turns of the class, and by the end I’d gained a new appreciation for machine learning. I even learned enough to develop a nerdy machine learning metaphor for how to make it through my class. Let me explain.

First, let me remind you that machine learning is all about teaching computers to make predictions. For example, suppose that for some reason we want to teach a computer to recognize whether an image is either of a doggo (i.e. dog) or of a manatee.

One of the largest difficulties in machine learning is that it’s easy to make predictions that are too specific to the provided data and don’t generalize well.

For example, if we only provide a computer with images of golden retrievers (labeled as doggos) and bluish gray manatees (labeled as manatees), the computer might begin to use the fact that the golden retrievers are gold to classify them as dogs. However, this would be bad, because if we then introduced a photo of a black labrador, the computer might be unable to tell that it’s a dog since it’s not golden.

The way around this problem is to use a variety of techniques (with names such as regularization, dropout, and more) that essentially make the machine learning predictions worse on the data provided so that the predictions might just be better on the data the computer hasn’t yet seen.
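For the curious, here is a minimal sketch of that trade-off in Python. It is a toy of my own making, not anything from the class: the four data points are hand-picked for illustration, and `fit_slope`, `mse`, and the `penalty` parameter are names I made up. It fits a single slope to two noisy training points, once with no penalty and once with an L2 (ridge) penalty that shrinks the slope toward zero.

```python
def fit_slope(xs, ys, penalty=0.0):
    """Closed-form slope w minimizing sum((w*x - y)^2) + penalty * w^2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + penalty)


def mse(w, xs, ys):
    """Mean squared error of the predictions w*x against ys."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hand-picked toy data (an assumption for illustration only).
# The underlying relationship is y = x; the training labels are noisy.
train_x, train_y = [1.0, 2.0], [1.4, 2.6]
test_x, test_y = [3.0, 4.0], [3.0, 4.0]

w_plain = fit_slope(train_x, train_y)              # no regularization
w_reg = fit_slope(train_x, train_y, penalty=1.0)   # L2-regularized

train_plain, test_plain = mse(w_plain, train_x, train_y), mse(w_plain, test_x, test_y)
train_reg, test_reg = mse(w_reg, train_x, train_y), mse(w_reg, test_x, test_y)

for name, tr, te in [("plain", train_plain, test_plain),
                     ("regularized", train_reg, test_reg)]:
    print(f"{name:12s} train MSE = {tr:.3f}   test MSE = {te:.3f}")
```

On this particular toy data, the regularized fit does worse on the training points and better on the held-out ones. The first half of that is guaranteed, since the unpenalized fit minimizes training error by definition. The second half is not: with different data or a different penalty, regularization can just as easily hurt.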

I find myself drawn to this idea that sometimes you need to make things a bit worse in order to make them better in the long run. It’s also an excellent metaphor for how to cope with the difficulties of life (such as a particularly challenging machine learning class).

While machine learning was probably more work than my other two classes combined, optimizing my life solely around doing machine learning work wouldn’t have led to the best outcome in the class. I would have burnt out.

Instead, I made my optimization process slightly less efficient in the short run by making sure I slept well, ate well, exercised, and had a social life. In essence, I implemented regularization in my own life.

In the long run, this paid off as I rode out the bumps, passed the class, and learned more than even an extremely sophisticated deep learning method could ever have predicted.

Grad Life blog posts offer insights from current MIT graduate students on Slice of MIT.

This post originally appeared on the MIT Graduate Admissions student blogs.